Imagine you’re a traveller in a strange land. A local approaches you and starts jabbering away in an unfamiliar language. He seems earnest, and is pointing off somewhere. But you can’t decipher the words, no matter how hard you try.

That’s pretty much the position of a young child when she first encounters language. In fact, she would seem to be in an even more challenging position. Not only is her world full of ceaseless gobbledygook; unlike our hypothetical traveller, she isn’t even aware that these people are attempting to communicate. And yet, by the age of four, every cognitively normal child on the planet has been transformed into a linguistic genius: this before formal schooling, before they can ride bicycles, tie their own shoelaces or do rudimentary addition and subtraction. It seems like a miracle. The task of explaining this miracle has been, arguably, the central concern of the scientific study of language for more than 50 years.

In the 1960s, the US linguist and philosopher Noam Chomsky offered what looked like a solution. He argued that children don’t in fact learn their mother tongue – or at least, not right down to the grammatical building blocks (the whole process was far too quick and painless for that). He concluded that they must be born with a rudimentary body of grammatical knowledge – a ‘Universal Grammar’ – written into human DNA. With this hard-wired predisposition for language, it should be a relatively trivial matter to pick up the superficial differences between, say, English and French. The process works because infants have an instinct for language: a grammatical toolkit that works on all languages the world over.

At a stroke, this device removes the pain of learning one’s mother tongue, and explains how a child can pick up a native language in such a short time. It’s brilliant. Chomsky’s idea dominated the science of language for four decades. And yet it turns out to be a myth. A welter of new evidence has emerged over the past few years, demonstrating that Chomsky is plain wrong.

But let’s back up a little. There’s one point that everyone agrees upon: our species exhibits a clear biological preparedness for language. Our brains really are ‘language-ready’ in the following limited sense: they have the right sort of working memory to process sentence-level syntax, and an unusually large prefrontal cortex that gives us the associative learning capacity to use symbols in the first place. Then again, our bodies are language-ready too: our larynx is set low relative to that of other hominid species, letting us expel and control the passage of air. And the position of the tiny hyoid bone beneath our jaws gives us fine muscular control over our mouths and tongues, enabling us to make as many as 144 distinct speech sounds, the number heard in some languages. No one denies that these things are thoroughly innate, or that they are important to language.

What is in dispute is the claim that knowledge of language itself – the language software – is something that each human child is born with. Chomsky’s idea is this: just as we grow distinctive human organs – hearts, brains, kidneys and livers – so we grow language in the mind, which Chomsky likens to a ‘language organ’. This organ begins to emerge early in infancy. It contains a blueprint for all the possible sets of grammar rules in all the world’s languages. And so it is child’s play to pick up any naturally occurring human language. A child born in Tokyo learns to speak Japanese while one born in London picks up English, and on the surface these languages look very different. But underneath, they are essentially the same, running on a common grammatical operating system. The Canadian cognitive scientist Steven Pinker has dubbed this capacity our ‘language instinct’.

There are two basic arguments for the existence of this language instinct. The first is the problem of poor teachers. As Chomsky pointed out in 1965, children seem to pick up their mother tongue without much explicit instruction. When they say: ‘Daddy, look at the sheeps,’ or ‘Mummy crossed [ie, is cross with] me,’ their parents don’t correct their mangled grammar, they just marvel at how cute they are. Furthermore, such seemingly elementary errors conceal amazing grammatical accomplishments. Somehow, the child understands that there is a lexical class – nouns – that can be singular or plural, and that this distinction doesn’t apply to other lexical classes.

This sort of knowledge is not explicitly taught; most parents don’t have any explicit grammar training themselves. And it’s hard to see how children could work out the rules just by listening closely: it seems fundamental to grasping how a language works. To know that there are nouns, which can be pluralised, and which are distinct from, say, verbs, is where the idea of a language instinct really earns its keep. Children don’t have to figure out everything from scratch: certain basic distinctions come for free.


Chomsky’s second argument shifts the focus to the abilities of the child. Think of this one as the problem of poor students. What general-purpose learning capabilities do children bring to the process of language acquisition? When Chomsky was formulating his ideas, the most influential theories of learning – for instance, the behaviourist approach of the US psychologist B F Skinner – seemed woefully unequal to the challenge that language presented.

Behaviourism saw all learning as a matter of stimulus-response reinforcement, in much the same way that Pavlov’s dog could be trained to salivate on hearing a dinner bell. But as Chomsky pointed out, in a devastating 1959 review of Skinner’s claims, the fact that children don’t receive formal instruction in their mother tongue means that behaviourism can’t explain how they acquire competence in grammar. Chomsky concluded that children must come to the language-learning process already prepared in some way. If they are not explicitly taught how grammar works, and their native learning abilities are not up to the task of learning by observation alone, then, by process of elimination, their grammar smarts must be present at birth.

Those, more or less, are the arguments that have sustained Chomsky’s project ever since. They seem fairly modest, don’t they? And yet the theoretical baggage that they impose on the basic idea turns out to be extremely significant. Over the past two decades, the language instinct has staggered under the weight.

Let’s start with a fairly basic point. How much sense does it make to call whatever inborn basis for language we might have an ‘instinct’? On reflection, not much. An instinct is an inborn disposition towards certain kinds of adaptive behaviour. Crucially, that behaviour has to emerge without training. A fledgling spider doesn’t need to see a master at work in order to ‘get’ web-spinning: spiders just do spin webs when they are ready, no instruction required.

Language is different. Popular culture might celebrate characters such as Tarzan and Mowgli, humans who grow up among animals and then come to master human speech in adulthood. But we now have several well-documented cases of so-called ‘feral’ children – children who are not exposed to language, either by accident or design, as in the appalling story of Genie, a girl in the US whose father kept her in a locked room until she was discovered in 1970, at the age of 13. The general lesson from these unfortunate individuals is that, without exposure to a normal human milieu, a child just won’t pick up a language at all. Spiders don’t need exposure to webs in order to spin them, but human infants need to hear a lot of language before they can speak. However you cut it, language is not an instinct in the way that spiderweb-spinning most definitely is.

But that’s by the by. A more important problem is this: If our knowledge of the rudiments of all the world’s 7,000 or so languages is innate, then at some level they must all be the same. There should be a set of absolute grammatical ‘universals’ common to every one of them. This is not what we have discovered. Here’s a flavour of the diversity we have found instead.

Spoken languages vary hugely in terms of the number of distinct sounds they use, ranging from 11 to an impressive 144 in some Khoisan languages (the African languages that employ ‘click’ consonants). They also differ over the word order used for subject, verb and object, with all possibilities being attested. English uses a fairly common pattern – subject (S) verb (V) object (O): The dog (S) bit (V) the postman (O). But other languages do things very differently. In Jiwarli, an indigenous Australian language, the components of the English sentence ‘This woman kissed that bald window cleaner’ would be rendered in the following order: That this bald kissed woman window cleaner.


Many languages use word order to signal who is doing what to whom. Others don’t use it at all: instead, they build ‘sentences’ by creating giant words from smaller word-parts. Linguists call these word-parts morphemes. You can often combine morphemes to form words, such as the English word ‘un-help-ful-ly’. The word tawakiqutiqarpiit from the Inuktitut language, spoken in eastern Canada, is roughly equivalent to: Do you have any tobacco for sale? Word order matters less when each word is an entire sentence.

The basic ingredients of language, at least from our English-speaking perspective, are the parts of speech: nouns, verbs, adjectives, adverbs and so on. But many languages lack adverbs, and some, such as Lao (spoken in Laos and parts of Thailand), lack adjectives. It has even been claimed that Straits Salish, an indigenous language spoken in and around British Columbia, gets by without nouns and verbs. Moreover, some languages feature grammatical categories that seem positively alien from our Anglocentric perspective. My favourite is the ideophone, a grammatical category that some languages employ to spice up a narrative. An ideophone is a fully fledged word type that integrates different sensory experiences arising in a single action: to pick one example, the word ribuy-tibuy, from the northern Indian language Mundari, describes the sight, motion and sound of a fat person’s buttocks as he walks.

And of course, language doesn’t need to be spoken: the world’s 130 or so recognised sign languages function perfectly adequately without sound. It’s a remarkable fact that linguistic meaning can be conveyed in multiple ways: in speech, by gestured signs, on the printed page or computer screen. It does not depend upon a particular medium for its expression. How strange, if there is a common element to all human language, that it should be hidden beneath such a bewildering profusion of differences.

As these unhelpful findings have emerged over the years, the language-instinct lobby has gradually downsized the allegedly universal component in the human brain. In a 2002 version, Chomsky and colleagues at Harvard proposed that perhaps all that is unique to the human language capability is a general-purpose computational capacity known as ‘recursion’.

Recursion allows us to rearrange words and grammatical units to form sentences of potentially infinite complexity. For instance, I can recursively embed relative-clause phrases – phrases beginning with who or which – to create never-ending sentences: The shop, which is on Petticoat Lane, which is near the Gherkin, which… But we now know that humans are not alone in their ability to recognise recursion: European starlings can do it too. This ‘unique’ property of human grammar might not be so unique after all. It also remains unclear whether it is really universal among human languages. A number of researchers have suggested that it might in fact have emerged rather late in the evolution of human grammar systems, a consequence, rather than a cause. And in 2005, the US linguist-anthropologist Daniel Everett claimed that Pirahã – a language indigenous to the Amazonian rainforest – does not use recursion at all. This would be very strange indeed if grammar really was hard-wired into the human brain.
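To make the idea of recursive embedding concrete, here is a minimal sketch in Python. The function name `noun_phrase` and the data layout are illustrative assumptions, not anything drawn from the linguistics literature; the point is only that a phrase can contain another phrase of the same kind, so the sentence can in principle grow without limit.

```python
def noun_phrase(links):
    """Build a noun phrase whose relative clause contains another noun phrase.

    `links` is a list of (noun, relation) pairs; the relation says how the
    noun relates to the next noun in the list. This layout is hypothetical,
    chosen only to illustrate the embedding.
    """
    (noun, relation), rest = links[0], links[1:]
    if not rest:
        # Base case: the last noun has nothing further embedded in it.
        return noun
    # Recursive case: the relative clause contains a whole new noun phrase.
    return f"{noun}, which is {relation} {noun_phrase(rest)}"


sentence = noun_phrase([
    ("The shop", "on"),
    ("Petticoat Lane", "near"),
    ("the Gherkin", None),
])
print(sentence)
```

Adding another pair to the list extends the sentence by one more embedded clause, which is all that ‘potentially infinite complexity’ amounts to here.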

So much for universals. Perhaps a more serious problem for the language instinct is the predictions it makes about how we learn to speak. It was intended to account for the observation that language acquisition seems fairly quick and automatic. The trouble is, it would seem to make the process a lot quicker and more automatic than it actually is.

When a child equipped with an inborn Universal Grammar acquires her first language, spotting a grammatical rule in her mother-tongue input should lead her to apply the rule across the board, to all comparable situations. Take the noun cat. Hearing a parent refer to the cat – using the definite article, the – should alert the infant to the fact that the definite article can apply to all nouns. The Universal Grammar predicts that there will be nouns, and maybe a strategy for modifying them – so the child expects to encounter this lexical class and is on the lookout for the strategy that English uses to modify nouns; namely, an article system. Just a few instances of hearing the followed by a noun should be sufficient; any infant acquiring English should immediately grasp the rule and apply it freely to the entire class of nouns. In short, we would expect to see discontinuous jumps in how children acquire language. There should be spurts in grammatical complexity every time a new rule kicks in.


It’s a striking prediction. Sadly, it doesn’t stand up to findings in the field of developmental psycholinguistics. On the contrary, children appear to pick up their grammar in quite a piecemeal way. For instance, focusing on the use of the English article system, for a long time they will apply a particular article (eg, the) only to those nouns to which they have heard it applied before. It is only later that children expand upon what they’ve heard, gradually applying articles to a wider set of nouns.

This finding seems to hold for all our grammatical categories. ‘Rules’ don’t get applied in indiscriminate jumps, as we would expect if there really was an innate blueprint for grammar. We seem to construct our language by spotting patterns in the linguistic behaviour we encounter, not by applying built-in rules. Over time, children slowly figure out how to apply the various categories they encounter. So while language acquisition might be uncannily quick, there isn’t much that’s automatic about it: it arises from a painstaking process of trial and error.
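The contrast between the two predictions can be put as a toy sketch in Python. Both learner functions and the sample data are hypothetical simplifications, not models taken from the developmental literature: one learner generalises an article to every noun it knows as soon as it hears the article once, while the other uses the article only with nouns it has actually heard it with.

```python
def rule_based_learner(utterances, nouns):
    """Apply an article to every known noun once it has been heard at all."""
    licensed = set()
    for article, _noun in utterances:
        # A single exposure licenses the article across the whole noun class.
        licensed.update((article, n) for n in nouns)
    return licensed


def item_based_learner(utterances):
    """Use an article only with the nouns it has been heard with."""
    return set(utterances)


heard = [("the", "cat"), ("the", "dog")]
known_nouns = {"cat", "dog", "ball", "shoe"}

rule_based = rule_based_learner(heard, known_nouns)
item_based = item_based_learner(heard)

print(("the", "ball") in rule_based)  # generalised after one exposure
print(("the", "ball") in item_based)  # not yet heard, so not yet used
```

On this sketch, the rule-based learner jumps straight to the full set of combinations, whereas the item-based learner’s repertoire grows only as new pairings turn up in what it hears, which is closer to the piecemeal pattern described above.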

What would a language instinct look like? Well, if language emerges from a grammar gene, which lays down a special organ in our brains during development, it seems natural to suppose that language should constitute a distinct module in our mind. There should be a specific region of the brain that is its exclusive preserve, an area specialised just for language. In other words, presumably the brain’s language-processor should be encapsulated – impervious to the influence of other aspects of the mind’s functioning.

As it happens, cognitive neuroscience research from the past two decades or so has begun to lift the veil on where language is processed in the brain. The short answer is that it is everywhere. Once upon a time, a region known as Broca’s area was believed to be the brain’s language centre. We now know that it doesn’t exclusively deal with language – it’s involved in a raft of other, non-linguistic motor behaviours. And other aspects of linguistic knowledge and processing are implicated almost everywhere in the brain. While the human brain does exhibit specialisation for processing different kinds of information, such as vision, there appears not to be a dedicated spot specialised just for language.

But perhaps the uniqueness of language arises not from a ‘where’ but a ‘how’. What if there is a type of neurological processing that is unique to language, no matter where it is processed in the brain? This is the idea of ‘functional’ rather than ‘physical’ modularity. One way of demonstrating it would be to find individuals whose language abilities were normal while their intellects were degraded, and vice versa. This would provide what scientists call a ‘double dissociation’ – a demonstration of the mutual independence of verbal and non-verbal faculties.


In his book The Language Instinct (1994), Steven Pinker examined various suggestive language pathologies in order to make the case for just such a dissociation. For example, some children suffer from what is known as Specific Language Impairment (SLI) – their general intellect seems normal but they struggle with particular verbal tasks, stumbling on certain grammar rules and so on. That seems like a convincing smoking gun – or it would, if it hadn’t turned out that SLI is really just an inability to process fine auditory details. It is a consequence of a perceptual deficit, in other words, rather than a specifically linguistic one. Similar stories can be told about each of Pinker’s other alleged dissociations: the verbal problems always turn out to be rooted in something other than language.

The preceding arguments all suggest that there isn’t any dedicated language organ in the brain. An alternative line of evidence implies something even stronger: that there couldn’t be such a thing. For a Universal Grammar to be hard-wired into the micro-circuitry of the human brain, it would need to be passed on via the genes. But recent research in neurobiology suggests that human DNA just doesn’t have anything like the coding power needed to do this. Our genome has a highly restricted information capacity. A significant amount of our genetic code is taken up with building a nervous system, even before it gets started on anything else. To write something as detailed and specific as knowledge of a putative Universal Grammar inside a human infant’s brain would use up huge informational resources – resources that our DNA just can’t spare. So the basic premise of the language instinct – that such a thing could be transmitted genetically – seems doubtful.

There is one last big problem for the idea of a Universal Grammar: its strange implications for human evolution. If language is genetically hard-wired, then it self-evidently had to emerge at some point in our evolutionary lineage. As it happens, when Chomsky was developing the theory, language was generally assumed to be absent in other species in our genus, such as Neanderthal man. This would seem to narrow the window of opportunity during which it could have emerged. Meanwhile, the relatively late appearance of sophisticated human culture around 50,000 years ago (think complex tool-making, jewellery, cave-art and so on) seemed to both require and confirm this late emergence. Chomsky argued that it might have appeared on the scene as little as 100,000 years ago, and that it must have arisen from a genetic mutation.

Stop and think about this: it is a very weird idea. For one thing, Chomsky’s claim is that language came about through a macro-mutation: a discontinuous jump. But this is at odds with the modern neo-Darwinian synthesis, widely accepted as fact, which has no place for such large-scale and unprecedented leaps. Adaptations just don’t pop up fully formed. Moreover, a bizarre consequence of Chomsky’s position is that language couldn’t have evolved for the purpose of communication: after all, even if a grammar gene could have sprung up out of the blue in one lucky individual (already vanishingly unlikely), the chance of two individuals getting the same mutation, at exactly the same time, is even more remote. And so, according to the theory of the language instinct, the world’s first language-equipped human presumably had no one to talk to.

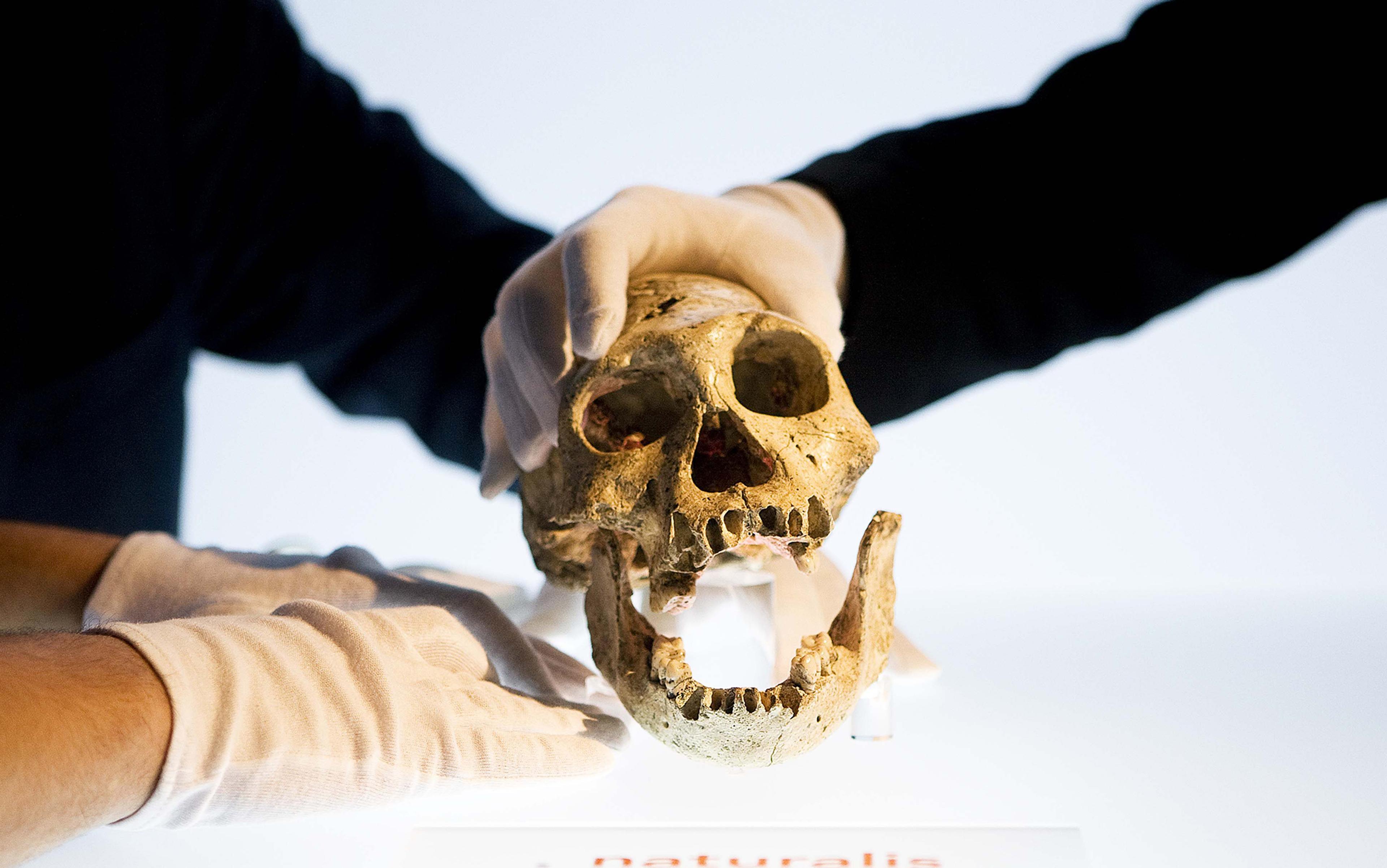

Something seems to have gone wrong somewhere. And indeed, we now believe that several of Chomsky’s evolutionary assumptions were incorrect. Recent reconstructions of the Neanderthal vocal tract show that Neanderthals probably did, in fact, have some speech capacity, perhaps very modern in quality. It is also becoming clear that, far from the dumb brutes of popular myth, they had a sophisticated material culture – including the ability to create cave engravings and produce sophisticated stone tools – not dissimilar to aspects of the human cultural explosion of 50,000 years ago. It is hard to see how they could have managed the complex learning and co-operation required for that if they didn’t have language. Moreover, recent genetic analysis reveals that a fair bit of interbreeding took place – most modern humans have a few bits of distinctively Neanderthal DNA. Far from modern humans arriving on the scene and wiping out the hapless ape men, it now looks like early Homo sapiens and Homo neanderthalensis could have co-habited and interbred. It does not seem far-fetched to speculate that they might also have communicated with one another.

This is all very well, but questions remain. Why is it that today, only humans have language, the most complex of animal behaviours? Surely something must have happened to cut us off from our nearest surviving relatives. The challenge for the anti‑language-instinct camp is to say what that might be. And a likely explanation comes from what we might dub our ‘co‑operative intelligence’, and the events that set it in motion, more than 2 million years ago.

Our lineage, Homo, dates back around 2.5 million years. Before that, our nearest forebears were essentially upright apes known as Australopithecines, creatures that were probably about as smart as chimpanzees. But at some point, something in their ecological niche must have shifted. These early pre-humans moved from a fruit-based diet – like most of today’s great apes – to meat. The new diet required novel social arrangements and a new type of co‑operative strategy (it’s hard to hunt big game alone). This in turn seems to have entailed new forms of co‑operative thought more generally: social arrangements arose to guarantee hunters an equal share of the bounty, and to ensure that women and children who were less able to participate also got a share.

According to the US comparative psychologist Michael Tomasello, by the time the common ancestor of Homo sapiens and Homo neanderthalensis had emerged sometime around 300,000 years ago, ancestral humans had already developed a sophisticated type of co‑operative intelligence. This much is evident from the archaeological record, which demonstrates the complex social living and interactional arrangements among ancestral humans. They probably had symbol use – which prefigures language – and the ability to engage in recursive thought (a consequence, on some accounts, of the slow emergence of an increasingly sophisticated symbolic grammar). Their new ecological situation would have led, inexorably, to changes in human behaviour. Tool-use would have been required, and co‑operative hunting, as well as new social arrangements – such as agreements to safeguard monogamous breeding privileges while males were away on hunts.


These new social pressures would have precipitated changes in brain organisation. In time, we would see a capacity for language. Language is, after all, the paradigmatic example of co‑operative behaviour: it requires conventions – norms that are agreed within a community – and it can be deployed to co‑ordinate all the additional complex behaviours that the new niche demanded.

From this perspective, we don’t have to assume a special language instinct; we just need to look at the sorts of changes that made us who we are, the changes that paved the way for speech. This allows us to picture the emergence of language as a gradual process from many overlapping tendencies. It might have begun as a sophisticated gestural system, for example, only later progressing to its vocal manifestations. But surely the most profound spur on the road to speech would have been the development of our instinct for co‑operation. By this, I don’t mean to say that we always get on. But we do almost always recognise other humans as minded creatures, like us, who have thoughts and feelings that we can attempt to influence.

We see this instinct at work in human infants as they attempt to acquire their mother tongue. Children have far more sophisticated learning capacities than Chomsky foresaw. They are able to deploy sophisticated intention-recognition abilities from a young age, perhaps as early as nine months old, in order to begin to figure out the communicative purposes of the adults around them. And this is, ultimately, an outcome of our co‑operative minds. Which is not to belittle language: once it came into being, it allowed us to shape the world to our will – for better or for worse. It unleashed humanity’s tremendous powers of invention and transformation. But it didn’t come out of nowhere, and it doesn’t stand apart from the rest of life. At last, in the 21st century, we are in a position to jettison the myth of Universal Grammar, and to start seeing this unique aspect of our humanity as it really is.